Designing Next Generation Exhaust Aftertreatment Systems Using Machine Learning

Contributed Article

Upcoming regulations in Europe and the US aim to significantly reduce tailpipe NOx emissions from modern diesel engines used in both passenger cars and heavy-duty (HD) trucks. For HD trucks, for example, California will require a 90% reduction in NOx emissions over a broader operating range, while also requiring increased durability. For background, read here for details on the Low NOx Omnibus rule.

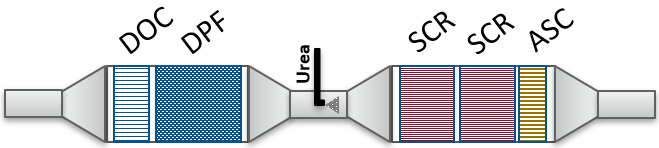

Selective catalytic reduction (SCR) of NOx using ammonia is a well-proven technology for converting NOx to N2, and researchers are focusing on ways to improve its efficiency, especially at low temperatures. Both engine improvements and new aftertreatment designs are being advanced.

One technology at the heart of any solution is the dosing system that injects urea into the exhaust stream, shown in the picture above. Urea decomposes to form ammonia, the reductant for NOx. For optimal performance, it is critical to ensure complete evaporation and decomposition of the urea, and a uniform ammonia distribution as the flow reaches the SCR catalysts. While CFD studies are routinely used to address these topics, data-driven and machine learning (ML) techniques are increasingly being used alongside traditional modeling tools to reduce development costs and timelines in various applications. This article summarizes a recent paper by a leading OEM that discusses such an approach.
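For readers new to the chemistry, the global reactions commonly used to describe this process are summarized below. They are textbook simplifications, not taken from the paper: urea thermolysis and isocyanic acid hydrolysis together release two molecules of NH3 per molecule of urea, and that NH3 then reduces NO over the SCR catalyst.

```latex
\begin{aligned}
\mathrm{(NH_2)_2CO} &\rightarrow \mathrm{NH_3 + HNCO} && \text{(urea thermolysis)}\\
\mathrm{HNCO + H_2O} &\rightarrow \mathrm{NH_3 + CO_2} && \text{(isocyanic acid hydrolysis)}\\
\mathrm{4\,NH_3 + 4\,NO + O_2} &\rightarrow \mathrm{4\,N_2 + 6\,H_2O} && \text{(standard SCR)}
\end{aligned}
```

If the spray does not fully evaporate and decompose upstream of the catalyst, less NH3 is available for the SCR reaction, which is why evaporation and ammonia uniformity matter so much.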

If you are new to the world of urea injection, here is a good primer from DieselNet. (It is well worth getting a subscription to read the full article and other technical information.)

Background

ML applied to DEF doser design optimization

Singh, S., Braginsky, D., Tamamidis, P., and Gennaro, M., “Designing Next Generation Exhaust Aftertreatment Systems Using Machine Learning,” SAE Int. J. Commer. Veh. 13(3):215-220, 2020, doi:10.4271/02-13-03-0016. ISSN: 1946-391X, e-ISSN: 1946-3928.

Researchers at CNH Industrial, a major OEM in the off-highway sector, wanted to improve the design of their existing Diesel Exhaust Fluid (DEF) doser in order to increase the evaporation rate of liquid DEF and improve the overall NOx conversion performance of their SCR system. Traditionally, such a study would involve extensive CFD (Computational Fluid Dynamics) simulations, which are computationally expensive and resource-intensive.

Using a large body of previously run CFD simulations, the researchers trained an ML model that takes the design parameters of their baseline DEF doser as inputs, with the CFD-simulated evaporation rates of liquid DEF serving as the labeled training data. The ML model thus acts as a stand-in for CFD, allowing much faster turnaround when experimenting with different combinations of the doser's design parameters. To converge on the parameter values that yield the highest evaporation rates, the ML model was coupled with a Genetic Algorithm (GA) that rapidly scans the large design space. The GA mimics how a species evolves from one generation to the next: it keeps the best-performing 50% of input combinations and rejects the bottom half. The surviving top half is then cross-bred to create a new full set of inputs, and the process continues until the optimization target is achieved (maximization of evaporation rates over X executions).
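A minimal sketch of the surrogate-plus-GA loop described above, using only the Python standard library. Everything here is an assumption for illustration: this summary does not give the paper's ML model type, parameter encoding, population size or generation count, so a k-nearest-neighbour regressor (`knn_surrogate`), uniform crossover, and the names `truncation_ga` and `toy_cfd` are hypothetical stand-ins. `toy_cfd` generates synthetic labels in place of real CFD results.

```python
import random
from typing import Callable, List, Sequence

# Hypothetical encoding: a design is a vector of doser parameters
# (e.g. spray angle, injector position, mixer geometry), each scaled to [0, 1].
Design = List[float]


def knn_surrogate(samples: Sequence[Design], labels: Sequence[float],
                  k: int = 3) -> Callable[[Design], float]:
    """Fit a k-nearest-neighbour regressor on (design, evaporation rate) pairs.

    Illustrative stand-in only; the paper's actual ML model is not assumed.
    """
    def predict(x: Design) -> float:
        # Rank training designs by squared Euclidean distance to x.
        order = sorted(range(len(samples)),
                       key=lambda i: sum((a - b) ** 2 for a, b in zip(samples[i], x)))
        nearest = order[:k]
        # Predict the mean evaporation rate of the k closest training cases.
        return sum(labels[i] for i in nearest) / len(nearest)
    return predict


def crossover(p1: Design, p2: Design, rng: random.Random) -> Design:
    # Uniform crossover: each parameter is inherited from one parent at random.
    return [a if rng.random() < 0.5 else b for a, b in zip(p1, p2)]


def truncation_ga(score: Callable[[Design], float], n_params: int,
                  pop_size: int = 20, generations: int = 30,
                  seed: int = 0) -> Design:
    """Maximize `score` by keeping the top 50% each generation and cross-breeding it."""
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(n_params)] for _ in range(pop_size)]
    for _ in range(generations):
        # Rank by predicted evaporation rate; keep the top half, reject the rest.
        population.sort(key=score, reverse=True)
        parents = population[: pop_size // 2]
        # Refill to full size with children of randomly paired survivors.
        children = []
        while len(parents) + len(children) < pop_size:
            p1, p2 = rng.sample(parents, 2)
            children.append(crossover(p1, p2, rng))
        population = parents + children
    return max(population, key=score)


# Usage with synthetic data: toy_cfd is a made-up placeholder for real CFD
# results, used only to produce training labels for this demo.
def toy_cfd(x: Design) -> float:
    return 1.0 - sum((v - 0.6) ** 2 for v in x)


data_rng = random.Random(42)
samples = [[data_rng.random(), data_rng.random()] for _ in range(50)]
labels = [toy_cfd(s) for s in samples]
surrogate = knn_surrogate(samples, labels, k=3)
best = truncation_ga(surrogate, n_params=2)
print("best design:", best, "predicted rate:", surrogate(best))
```

Because the surviving half is carried over unchanged, the best predicted evaporation rate can never decrease from one generation to the next. Without a mutation step, however, crossover can only recombine parameter values already present in the initial population, which is one reason GAs usually add mutation.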

To further increase the robustness of this method, a random mutation is introduced every X executions of the GA. The overall result of this combined approach is a much faster convergence on the set of parameters that yield the highest evaporation rates. The converged design parameter values were then validated with a full CFD run, and the results were within 7.5% of the predicted value. The overall improvement over the baseline doser design was around 13%, but the real value of this method lies in the savings in computational resources and time needed to arrive at the optimized design parameter values. Instead of performing a CFD run for every possible combination of inputs, the researchers were able to quickly reject a large body of suboptimal combinations and arrive at an optimized set of values in a fraction of the time.
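The periodic mutation step and the two headline metrics can be sketched as follows. Assumptions, all hypothetical: `every` stands in for the unspecified X; clipped Gaussian mutation with `rate` and `sigma` is a common GA choice picked for illustration, not the paper's operator; the 7.5% deviation is measured relative to the ML prediction, following the article's wording; the numbers in the usage lines are illustrative, not results from the paper.

```python
import random
from typing import List

Design = List[float]  # hypothetical: doser parameters, each scaled to [0, 1]


def mutate(design: Design, rng: random.Random,
           rate: float = 0.2, sigma: float = 0.1) -> Design:
    """With probability `rate`, add Gaussian noise to a parameter; clip to [0, 1]."""
    return [min(1.0, max(0.0, v + rng.gauss(0.0, sigma))) if rng.random() < rate else v
            for v in design]


def maybe_mutate(ranked: List[Design], generation: int, every: int,
                 rng: random.Random) -> List[Design]:
    """Mutate the population only on every `every`-th generation (1-based).

    `ranked` is assumed to be sorted best-first; the best design is left
    untouched so a mutation step can never lose the current optimum.
    """
    if generation % every != 0:
        return ranked
    return [ranked[0]] + [mutate(d, rng) for d in ranked[1:]]


def relative_error(predicted: float, cfd: float) -> float:
    """Deviation of the CFD validation run from the surrogate prediction."""
    return abs(cfd - predicted) / abs(predicted)


def improvement(baseline: float, optimized: float) -> float:
    """Fractional gain of the optimized design over the baseline doser."""
    return (optimized - baseline) / baseline


# Usage with illustrative numbers (not from the paper):
rng = random.Random(1)
population = [[0.2, 0.8], [0.5, 0.5], [0.9, 0.1]]
print(maybe_mutate(population, generation=5, every=5, rng=rng))
print(relative_error(predicted=100.0, cfd=95.0) <= 0.075)  # True: within 7.5%
print(improvement(baseline=100.0, optimized=113.0))  # 0.13, i.e. a 13% gain
```

Sparing the current best from mutation keeps the search's progress intact while still letting the rest of the population explore parameter values that crossover alone could never produce.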

Techniques such as these are expected to grow in scale and scope as computing power and proficiency in data-driven methods continue to increase across all fields. If you are a researcher or working professional using tools that model complex physical or chemical systems, it may be worth looking for areas in your projects where computationally leaner techniques such as the one discussed here can reduce computational overhead and development costs.
